Building a robust, web-based application backed by well-grounded software practices

Data Engineering Case Study

Overview

Recently, our team delivered a web-based, client-facing application that is now part of the client's daily underwriting workflow: the client submits case data and receives model scores through API requests. In this case study, we explore the design decisions and technical expertise that made it possible to build and integrate a non-trivial underwriting solution within a short timeframe. The process is the software development and deployment cycle in action, tailored to the evolving insurance industry and our client's specific needs.

Development

Designing the application

| App Component | Technology | What is it? | Why is it used? |

|---|---|---|---|

| Frontend | ReactJS | JavaScript framework for creating interactive user interfaces (UI). | Provides an easy way to create a dashboard to display statistics about the cases submitted. |

| Parcel | Web application bundler, which combines the multiple assets that are needed for the UI (HTML, CSS, and JavaScript). | Makes the UI more efficient to run on modern web browsers. | |

| Backend | Flask (with gunicorn) | Python libraries and tools for creating web-based applications. | Allows for lightweight applications that can handle complexity. Also used to distribute API request loads. |

| CosmosDB | A NoSQL Azure database that supports SQL querying. Enables data retrieval via PowerBI. | Stores the parsed details of requests submitted. It is designed for fast retrieval of items and does not require a schema. | |

| Azure Blob Storage | Storage for unstructured data (like model object files). | Provides a simple service for saving and loading files in the cloud. | |

| Deployment | Docker | Designed to make it easier to create, deploy, and run applications by using containers. | Allows a developer to package up the needed parts for an application (libraries, dependencies, etc.) and ship it as one package. |

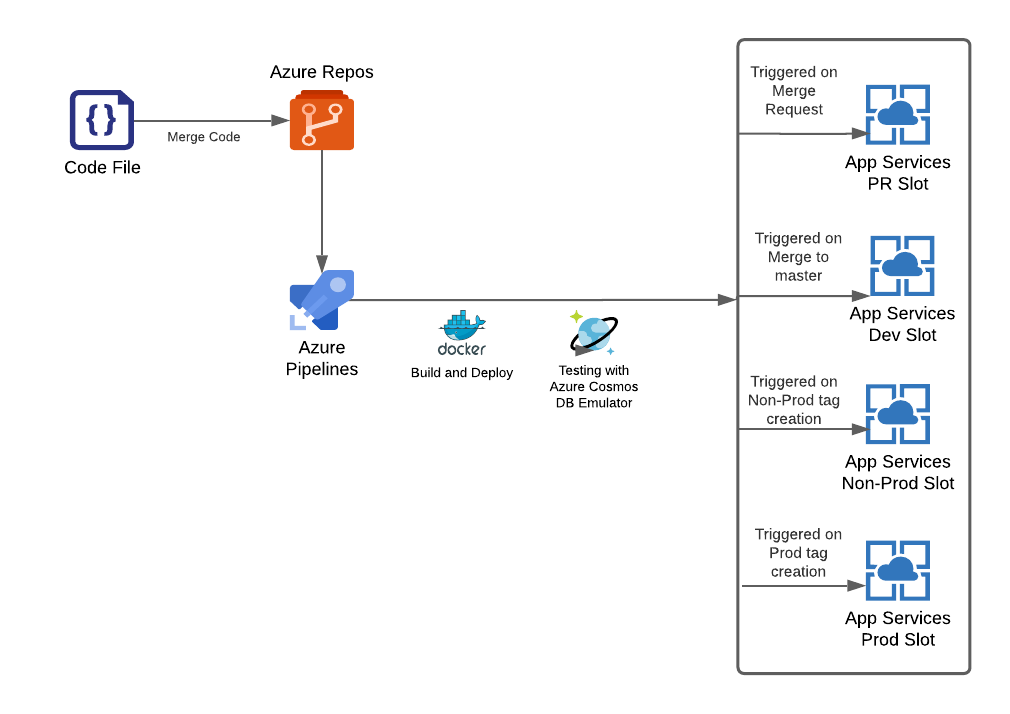

| Azure Pipeline and Azure App Service | Continuous integration and continuous deployment (CI/CD) tool. Code is deployed to “slots” set up in App Service, with separate regions for Development, Non-Production (also called UAT), and Production. | Streamlines the integration of new changes into the system by automatically building, testing, and deploying application code. |

Deployment

Making the application available to the client

- A developer submits new changes via pull requests to Azure Repos.

- If the tests pass, another developer will peer-review and approve changes.

- On approval, the Azure Pipeline is triggered to create containerized development instances of the application, run automated unit tests and check code coverage.

- Once the application is tested in the application's development region, Azure Pipeline is triggered to deploy the new code to a User Acceptance Testing (UAT) environment, which is otherwise identical to the production environment where the final app runs.

- The client runs regression tests on this copy of the application to validate the changes.

- Upon client approval, the new version of the application is promoted to production.
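
The promotion gates in the steps above can be summarised in a few lines of Python. This is only an illustrative sketch, not the actual Azure Pipeline configuration: the stage names follow the text, while the gate names (`automated_tests_passed`, `client_approved`) and the `next_stage` helper are hypothetical.

```python
from typing import Dict, Optional

# Environment order taken from the steps above.
STAGES = ["development", "uat", "production"]

# Hypothetical gate names: the check that must pass before leaving each stage.
GATES = {
    "development": "automated_tests_passed",
    "uat": "client_approved",
}


def next_stage(current: str, checks: Dict[str, bool]) -> Optional[str]:
    """Return the stage a build may be promoted to, or None if it must stay put."""
    gate = GATES.get(current)
    if gate is None or not checks.get(gate, False):
        return None  # production is final, or the gate has not passed
    return STAGES[STAGES.index(current) + 1]
```

Under these assumptions, a build in UAT stays there until the client's regression tests are approved, and nothing is promoted beyond production.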

Once the application is live, the client uses API calls to submit the fields required to score each applicant. In return, the client receives a real-time response that contains the model output and any other calculated fields. Clients are generally well equipped to integrate these responses into their workflows, though Data Engineering also assists along the way to ensure a smooth process. Other integrated functionalities of Azure Cloud Services, such as health monitoring, are used to check the live production application's availability and responsiveness. This automated monitoring can restart parts of the application to keep it live and working for the client.
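
The request/response exchange described above can be sketched as a plain Python function. This is a minimal illustration rather than the production code: the field names (`applicant_id`, `age`, `sum_assured`), the `REQUIRED_FIELDS` tuple, and the `toy_model` scoring rule are all hypothetical, since the real API contract is not given here. In a Flask application, a function like this would typically be called from a route handler.

```python
from typing import Any, Callable, Dict, Tuple

# Hypothetical field names; the real API contract is not given in this case study.
REQUIRED_FIELDS = ("applicant_id", "age", "sum_assured")


def toy_model(case: Dict[str, Any]) -> float:
    """Placeholder scoring rule standing in for the real underwriting model."""
    return round(min(1.0, case["age"] / 100), 2)


def handle_score_request(
    payload: Dict[str, Any],
    model: Callable[[Dict[str, Any]], float] = toy_model,
) -> Tuple[Dict[str, Any], int]:
    """Validate a submitted case and return (response body, HTTP status code)."""
    missing = [field for field in REQUIRED_FIELDS if field not in payload]
    if missing:
        # Reject incomplete cases before they reach the model.
        return {"error": "missing fields", "fields": missing}, 400
    score = model(payload)
    return {"applicant_id": payload["applicant_id"], "score": score}, 200
```

Keeping validation and scoring in a function that is independent of the web framework makes it straightforward to unit test on sample data.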

Testing is consistent throughout both development and deployment to ensure that the application acts as expected. During development, unit tests, or tests that cover small, well-defined portions of the code, are run on sample data. More technically challenging tests verify that reading from and writing to databases work correctly. For this, Azure's CosmosDB Emulator is used to make mock connections to local copies of the database to test the application without incurring cloud storage costs. Finally, user acceptance testing on a parallel but isolated copy of the production app is vital to ensure the process works as desired from a business user's perspective.
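
A database read/write test of the kind described above might look like the following sketch. Note the substitution: instead of the CosmosDB Emulator, it uses a hypothetical in-memory stand-in (`InMemoryCaseStore`) so that it runs with the Python standard library alone. The `save_scored_case` helper and the item layout are likewise assumptions for illustration; the same test shape would apply when the store is backed by the emulator.

```python
import unittest
from typing import Any, Dict


class InMemoryCaseStore:
    """Hypothetical stand-in for the database layer, keyed by item id."""

    def __init__(self) -> None:
        self._items: Dict[str, Dict[str, Any]] = {}

    def upsert(self, item: Dict[str, Any]) -> None:
        self._items[item["id"]] = dict(item)

    def read(self, item_id: str) -> Dict[str, Any]:
        return dict(self._items[item_id])


def save_scored_case(store: InMemoryCaseStore, case_id: str, score: float) -> None:
    """Persist a case id together with its model score."""
    store.upsert({"id": case_id, "score": score})


class SaveScoredCaseTest(unittest.TestCase):
    def test_round_trip(self) -> None:
        # What is written should be readable back unchanged.
        store = InMemoryCaseStore()
        save_scored_case(store, "case-1", 0.4)
        self.assertEqual(store.read("case-1"), {"id": "case-1", "score": 0.4})

    def test_missing_case_raises(self) -> None:
        with self.assertRaises(KeyError):
            InMemoryCaseStore().read("unknown")
```

The tests can be run with `python -m unittest` from the directory containing the file.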

Security

Protecting the application

Security is paramount since data submitted to the API contains sensitive information, and the model response is also proprietary. During development, sensitive material was received from the client using Secure File Transfer Protocol (SFTP), which provides a secure means of transferring files, rather than email. As part of the deployment, all traffic to and from the API is encrypted. To ensure no malicious user can make API requests, the API endpoint is accessible only to users with an API access key that Azure App Service has authenticated. Also, the production and non-production app instances are isolated and use different databases with different access keys. If a malicious user ever obtained the API key, that user could post requests but could not read any of the information stored in the databases. Logging also helps detect intrusion attempts, such as a suspicious web-crawling pattern; when one is detected, the app is made temporarily unavailable while the event is investigated.
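
The idea of per-environment API keys can be illustrated with a short sketch. In the deployment described above, key authentication is handled by Azure App Service; the code below is only an application-level illustration of the same principle. The `x-api-key` header name, the `API_KEYS` table, and the example key values are all hypothetical.

```python
import hmac
from typing import Dict, Mapping

# Hypothetical keys; real keys would come from secure configuration, not source code.
API_KEYS: Dict[str, str] = {
    "production": "prod-key-example",
    "uat": "uat-key-example",
}


def is_authorized(headers: Mapping[str, str], environment: str) -> bool:
    """Return True only if the request carries the key for this environment."""
    expected = API_KEYS.get(environment)
    if expected is None:
        return False  # unknown environment: deny by default
    supplied = headers.get("x-api-key", "")
    # compare_digest is designed to resist timing attacks on the key contents.
    return hmac.compare_digest(supplied.encode(), expected.encode())
```

Because each environment has its own key, a key issued for UAT is rejected by the production instance, which mirrors the isolation between production and non-production described above.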

Finally, the entire Azure infrastructure is contained in a virtual network. A virtual network isolates the resources inside the network from the public. All virtual network resources can communicate using private IP addresses, but the network is generally hidden from the public. Azure Firewall also monitors and controls the incoming and outgoing network traffic based on predetermined security rules. This establishes a barrier between a trusted internal network and an untrusted external network, such as the Internet, and provides another security layer.

Conclusion

This case study presents a high-level view of the decisions and processes that our Data Engineering team undertook to deploy machine learning model predictions as a web-based service for our client. Throughout, we considered various choices for cloud architecture and security, with some options ruled out due to the client's requirements or after testing. As Data Engineers, we strive to improve, and our continuous integration/continuous deployment (CI/CD) process was enhanced over time. For example, resource allocation between the production and non-production applications was modified to optimize performance while managing costs. Currently, the application is stable in production and returning secure, real-time results to the client.

Integrated Analytics provides white-labeled analytics solutions for Munich Re's North America Life clients. To that end, Data Science and Data Engineering work hand in hand with clients to develop products that meet business needs and technical requirements.
